Thomas Wimmer

PhD Student at the Max Planck ETH Center for Learning Systems (CLS).

Hi! I am currently pursuing a PhD through the Max Planck ETH Center for Learning Systems (CLS) and ELLIS programs. My advisors are Jan Eric Lenssen, Bernt Schiele, Christian Theobalt (MPI), and Siyu Tang (ETH).

I previously completed a double master’s degree at the Technical University of Munich and the Institut Polytechnique de Paris. During my studies, I had the chance to work with many great people, including Daniel Cremers, Maks Ovsjanikov, Peter Wonka, and Federico Tombari.

My main research interests lie at the intersection of computer vision, computer graphics, and geometry processing, focusing on (dynamic) 3D scene understanding, reconstruction, and generation, as well as visual semantics. However, I am always open to new ideas and collaborations in related fields. This website gives you an overview of my recent research and other projects.

news

| Oct 14, 2025 | New pre-print: “AnyUp: Universal Feature Upsampling” is now available on arXiv! Super excited to share this work, where we propose a first-of-its-kind feature-agnostic upsampling architecture that can upsample features from any vision model at any resolution, without requiring any encoder-specific training. New state-of-the-art results on multiple downstream benchmarks, while being the first upsampler that naturally generalizes to different feature types at inference time. |

|---|---|

| Jun 05, 2025 | New pre-print: “Do It Yourself: Learning Semantic Correspondence from Pseudo-Labels” is now available on arXiv! We show that foundational features can be refined with an adapter that is trained with pseudo-labels, which are themselves zero-shot predictions using the same foundational features. We improve the quality of pseudo-labels through 3D-aware chaining with cycle-consistency and reject wrong pairs using a spherical prototype. New state-of-the-art results on SPair71k and scalable to larger datasets. Accepted to ICCV 2025! |

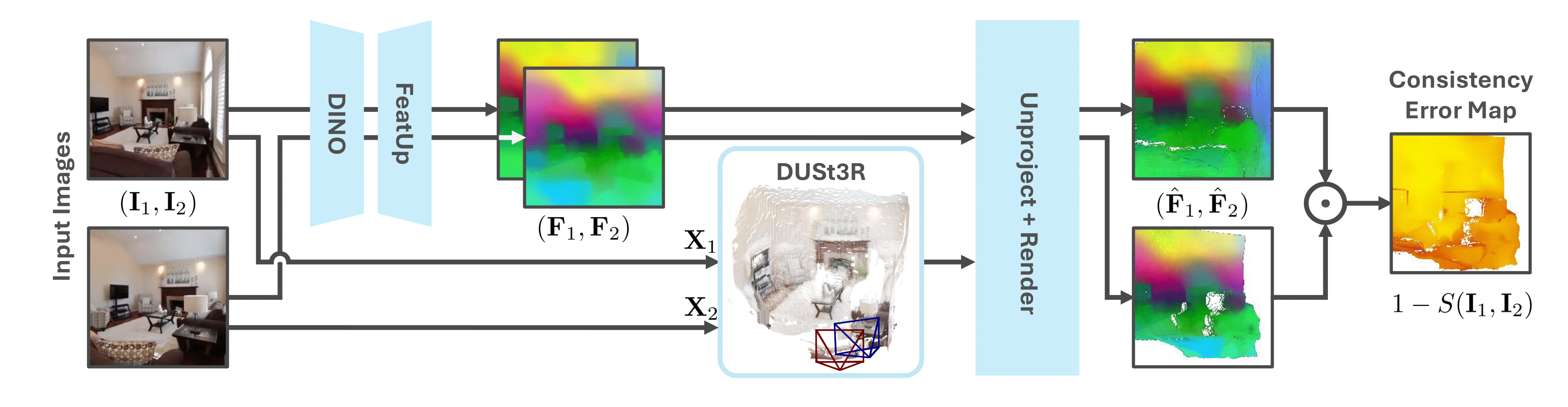

| Jan 12, 2025 | Our pre-print “MEt3R: Measuring Multi-View Consistency in Generated Images” is now available on arXiv! In this work, we propose a DUSt3R-based method to measure multi-view consistency which can, e.g., be used to evaluate the 3D consistency of video diffusion models. Accepted to CVPR 2025! |

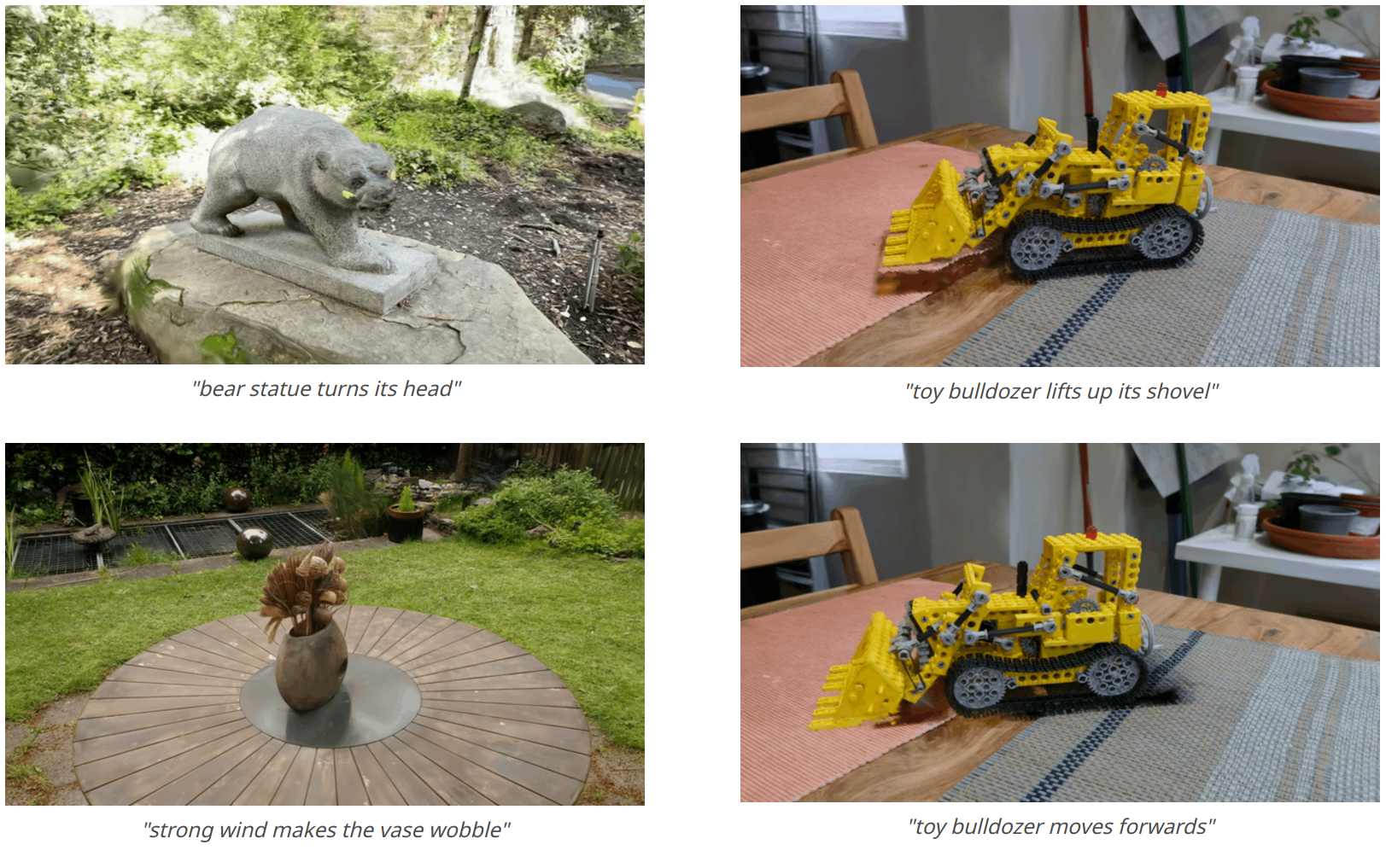

| Nov 05, 2024 | Happy to report that my latest paper, “Gaussians-to-Life: Text-Driven Animation of 3D Gaussian Splatting Scenes”, was accepted for publication at 3DV 2025. Thanks for a great collaboration to my co-authors, Michael Oechsle, Michael Niemeyer, and Federico Tombari! |