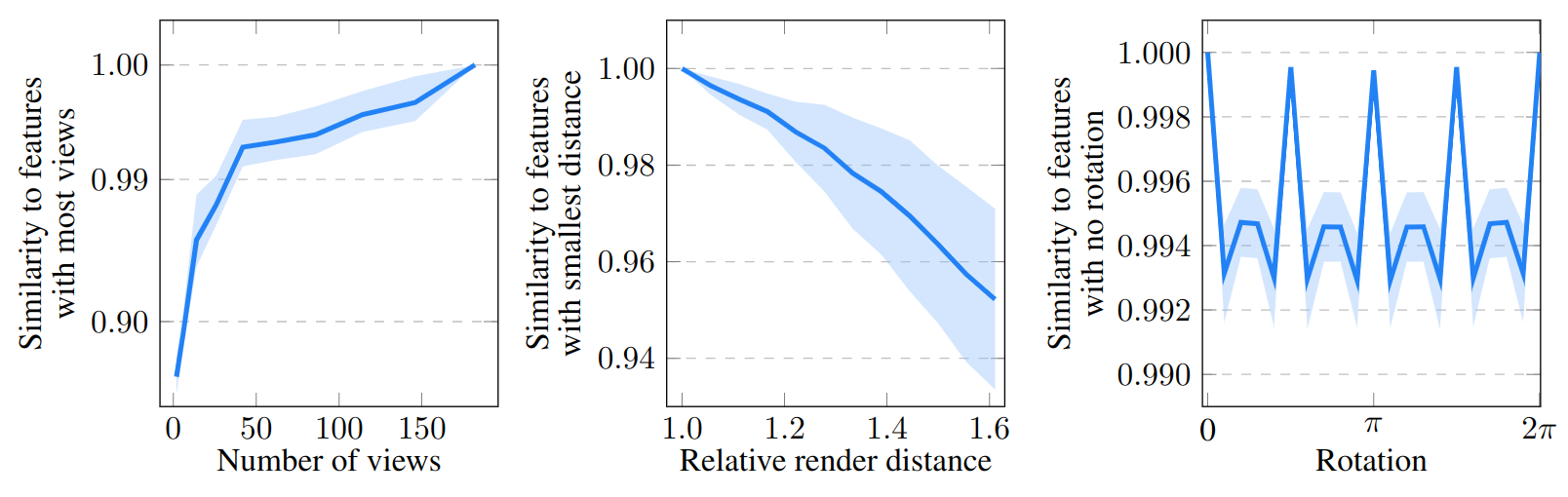

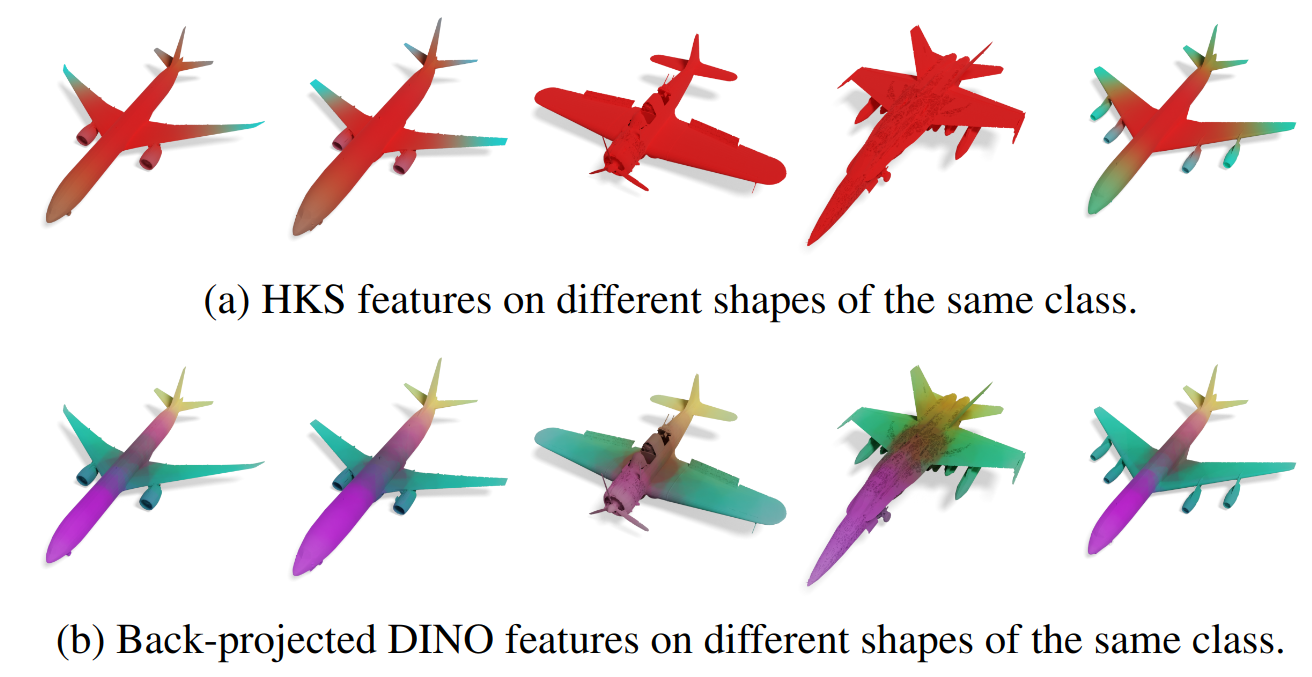

With the immense growth of dataset sizes and computing resources in recent years, so-called foundation models have become popular in NLP and vision tasks. In this work, we explore foundation models for the task of keypoint detection on 3D shapes. Keypoint detection is distinctive in that it requires both semantic and geometric awareness while demanding high localization accuracy. To address this, we first back-project features from large pre-trained 2D vision models onto 3D shapes and employ them for this task. We show that this yields robust 3D features that contain rich semantic information, and we analyze multiple candidate features stemming from different 2D foundation models. Second, we employ a keypoint candidate optimization module, guided by the back-projected features, that aims to match the average observed distribution of keypoints on the shape. The resulting approach achieves a new state of the art for few-shot keypoint detection on the KeyPointNet dataset, almost doubling the performance of the previous best methods.

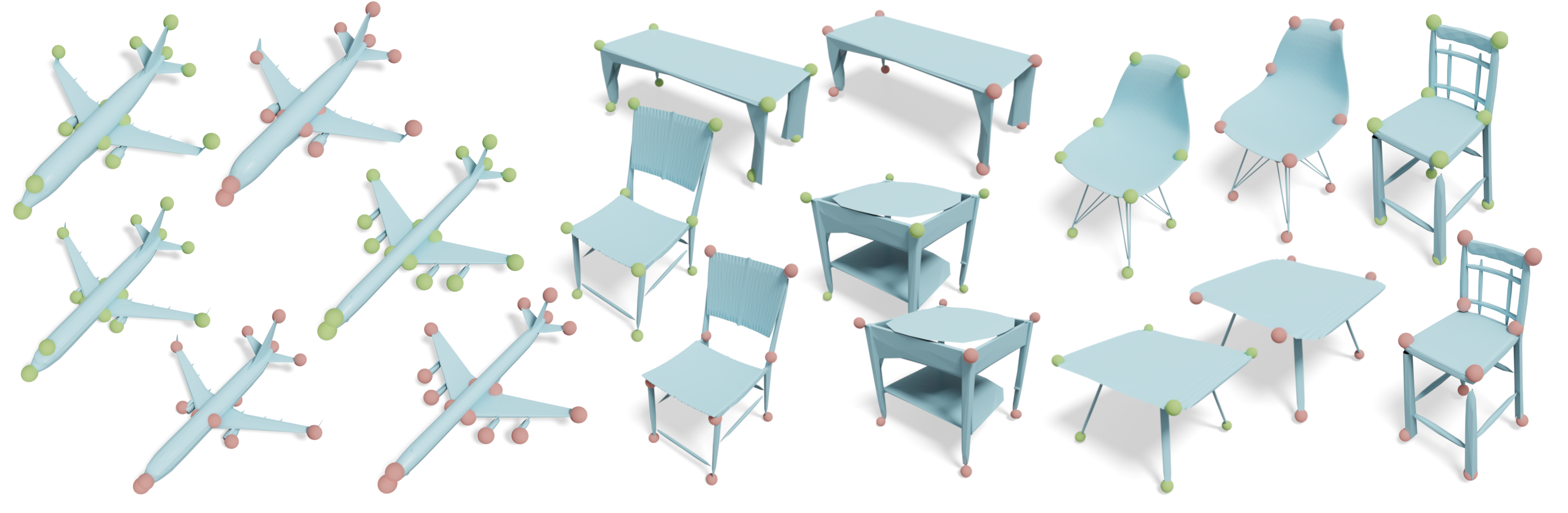

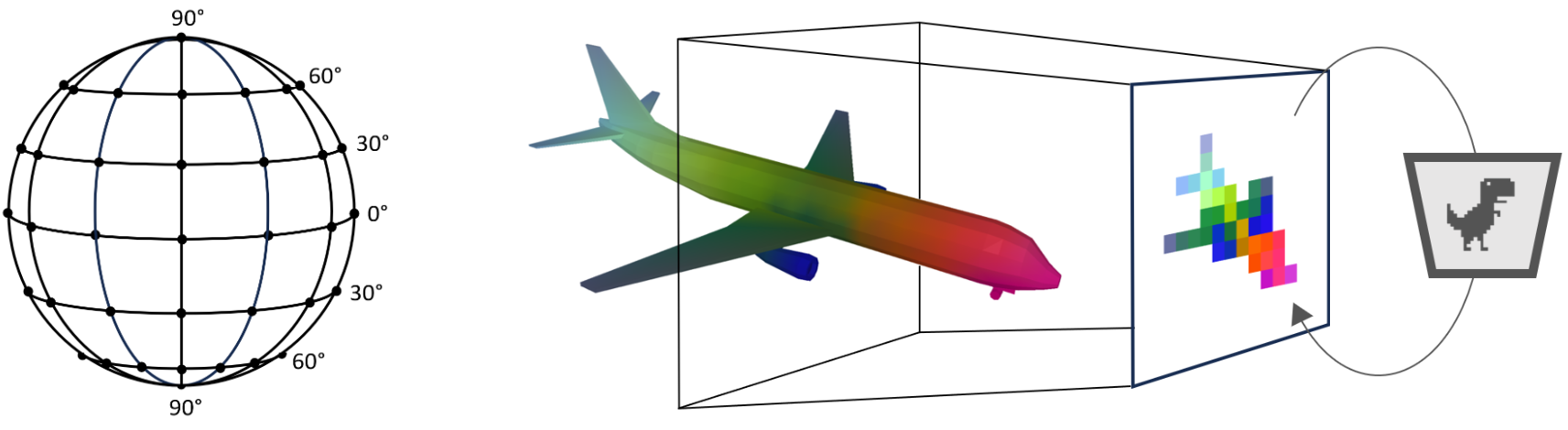

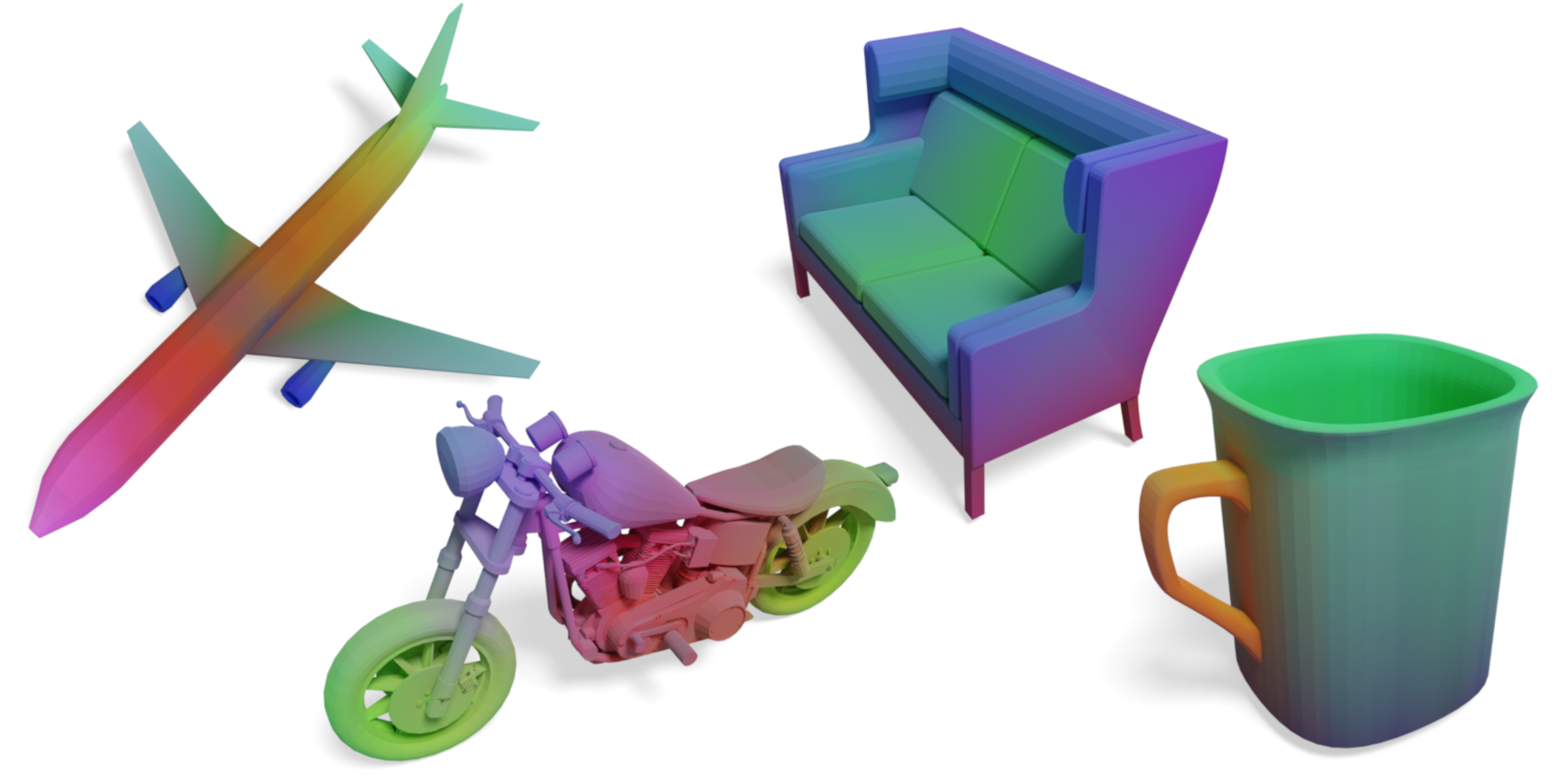

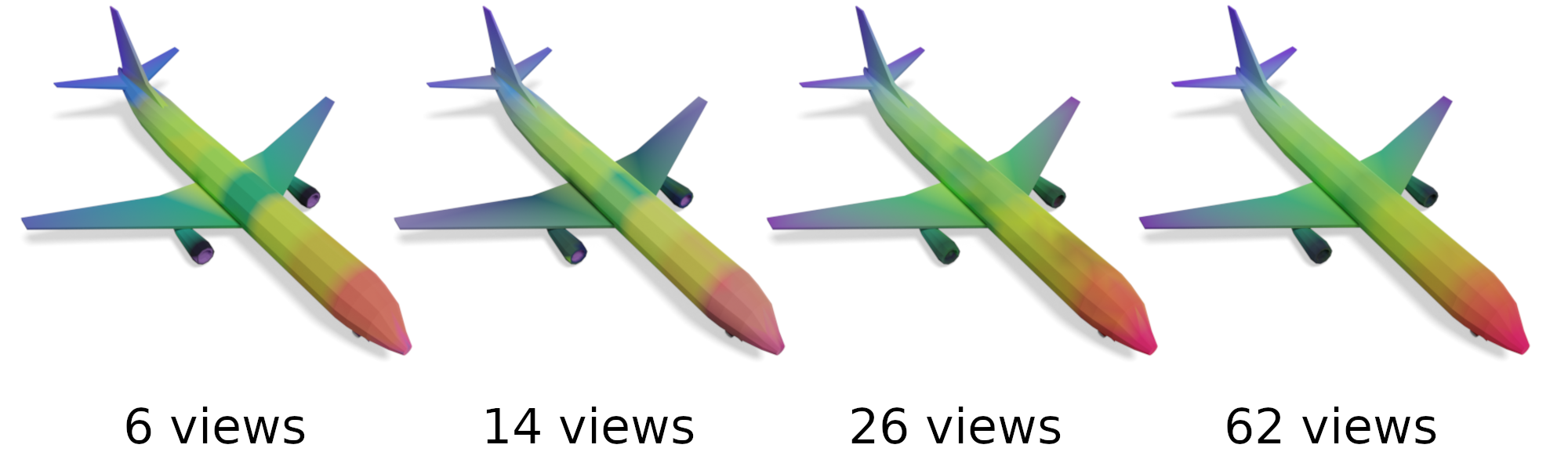

We consider the few-shot keypoint detection problem. Our overall strategy is to transfer the keypoints from the labeled (source) shapes onto the unlabeled (target) shape. Our solution comprises two main components: a point similarity component, which measures the similarity between vertices of the source and target shapes, and an optimization block, which aims to preserve the overall distribution of keypoints and prevent collapses, e.g., due to symmetries. Crucially, for point similarity, we propose to back-project features given by 2D foundation models onto the 3D shapes. We call our method B2-3D.
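To make the back-projection idea concrete, the following is a minimal sketch rather than the B2-3D implementation. It assumes that rendering and visibility testing have already been done, so that each view provides a 2D feature map together with the pixel each visible vertex lands on. Averaging over views and comparing vertices by cosine similarity are assumptions made for illustration, and the names `back_project` and `cosine_similarity` are hypothetical.

```python
import math


def back_project(num_vertices, views):
    """Average 2D features onto 3D vertices (illustrative assumption).

    views: list of (feature_map, pixel_of_vertex) pairs, where
      feature_map[row][col] is a feature vector (list of floats) and
      pixel_of_vertex[v] is (row, col) if vertex v is visible in that
      view, or None if it is occluded or outside the image.
    Returns one feature vector per vertex, or None for unseen vertices.
    """
    sums = [None] * num_vertices
    counts = [0] * num_vertices
    for feature_map, pixel_of_vertex in views:
        for v, pixel in enumerate(pixel_of_vertex):
            if pixel is None:
                continue  # vertex not visible in this view
            row, col = pixel
            feat = feature_map[row][col]
            if sums[v] is None:
                sums[v] = [0.0] * len(feat)
            for i, x in enumerate(feat):
                sums[v][i] += x
            counts[v] += 1
    return [
        None if c == 0 else [s / c for s in sums[v]]
        for v, c in enumerate(counts)
    ]


def cosine_similarity(a, b):
    """Cosine similarity of two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy example: three vertices, two views with 1x2 feature maps.
view_a = ([[[1.0, 0.0], [0.0, 1.0]]], [(0, 0), (0, 1), None])
view_b = ([[[3.0, 0.0], [0.0, 1.0]]], [(0, 0), None, None])
vertex_features = back_project(3, [view_a, view_b])
```

In this toy setup, vertex 0 is seen in both views and receives the mean of the two pixel features, vertex 1 is seen once, and vertex 2 is never seen and stays `None`. A practical pipeline would need a strategy for such unseen vertices, which this sketch leaves open.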

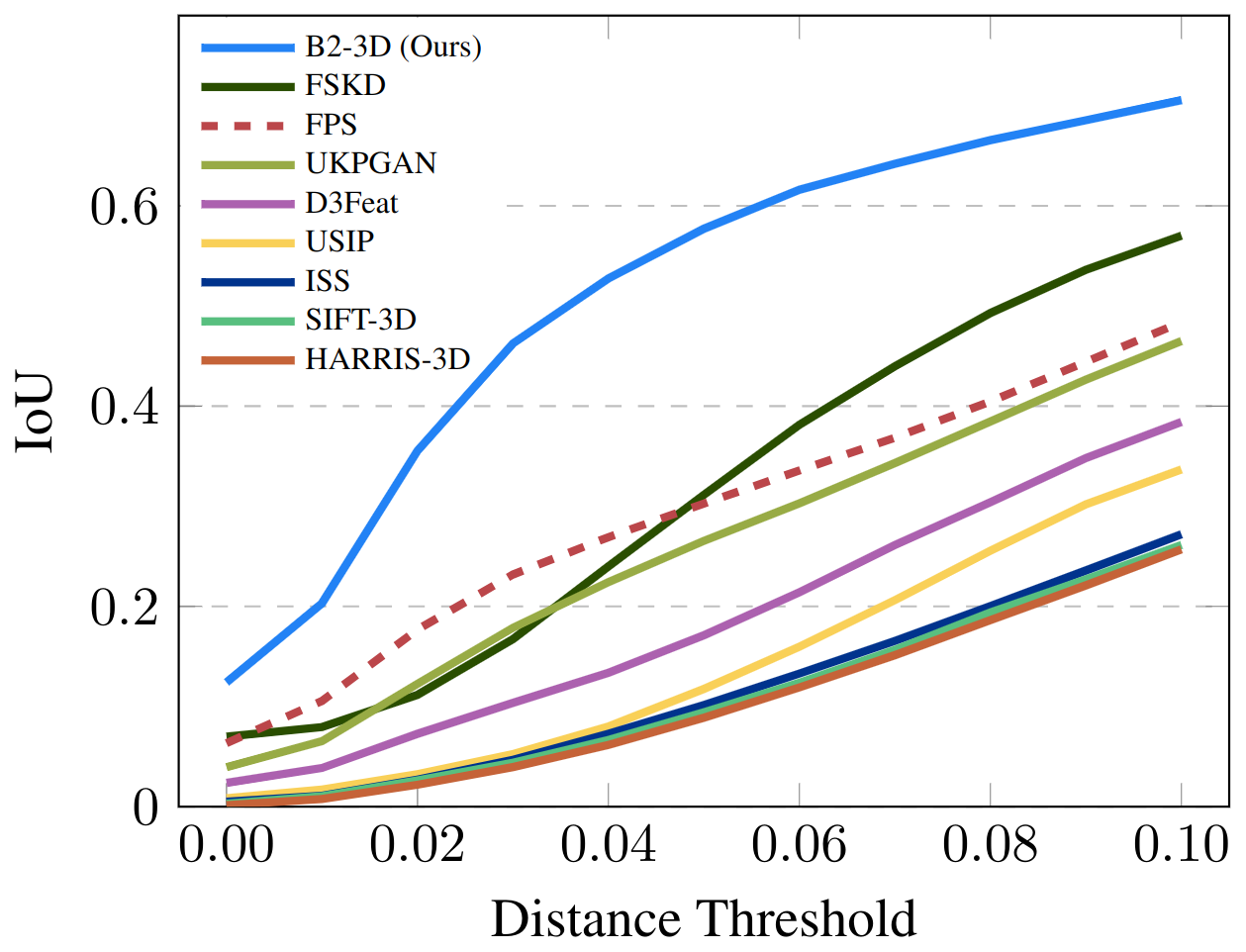

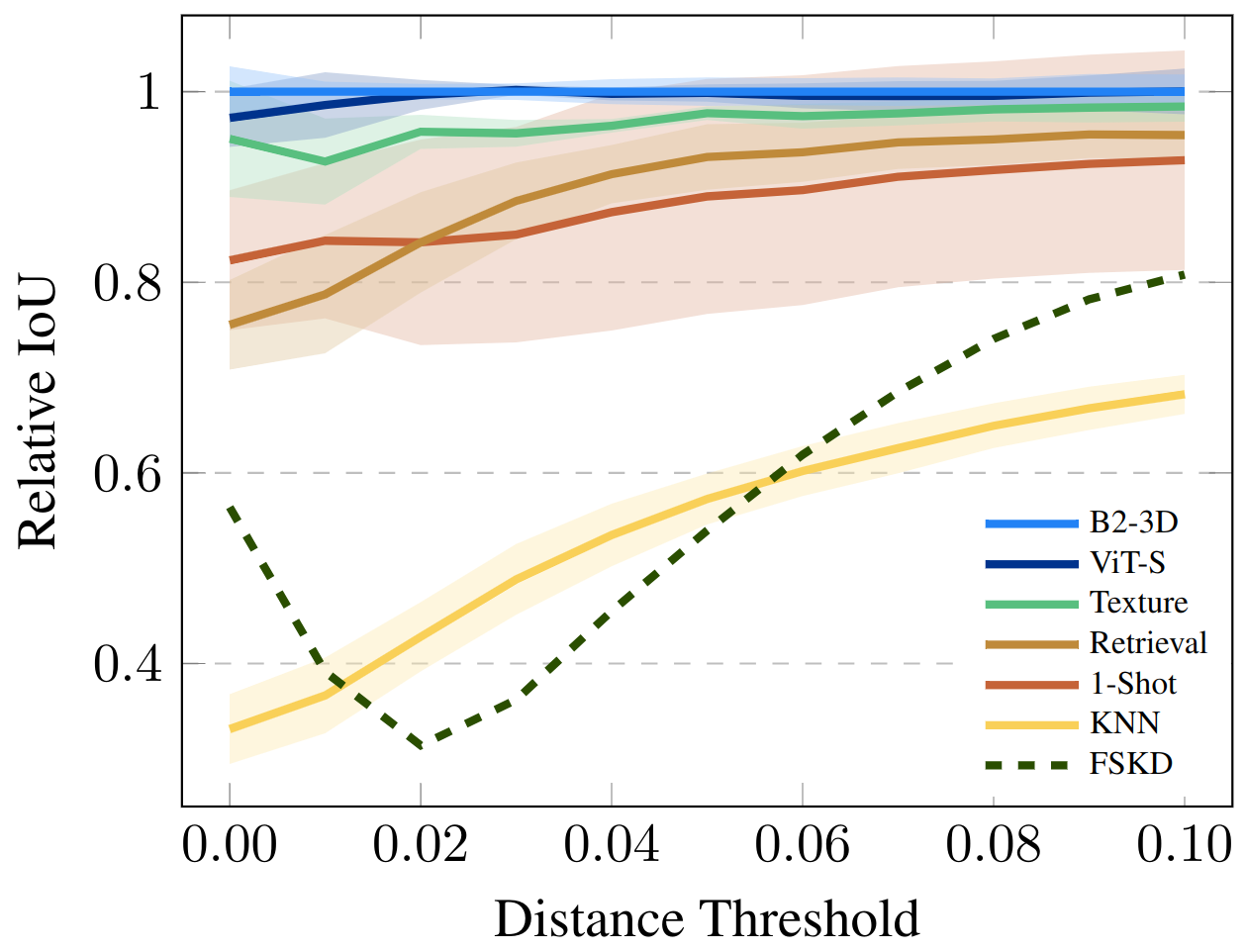

We evaluate our method on the KeyPointNet dataset and compare it to state-of-the-art few-shot keypoint detection methods. Using back-projected DINO features and our keypoint candidate optimization module, we outperform the previous best method by a large margin (a 93% improvement in IoU on average). We find that even a single labeled sample provides good guidance for the optimization [1-Shot], and we show the efficacy of our proposed optimization module by comparing against a simple nearest-neighbor search [KNN]. We further underline the strong generalizability of the back-projected features by evaluating them on the task of part segmentation transfer on the ShapeNet Part dataset. Using KNN classification in the feature space, our method outperforms the previous state of the art, NCP, by almost 2% in mIoU.
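The two nearest-neighbor uses mentioned above can be sketched as follows. This is a hedged illustration, not the evaluated code: it assumes per-vertex features are already available as plain lists of floats, uses cosine similarity as the metric (the text does not specify one), and the function names and the choice `k=3` are hypothetical.

```python
import math
from collections import Counter


def cosine_similarity(a, b):
    """Cosine similarity of two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def transfer_keypoints_nn(source_keypoint_feats, target_feats):
    """Nearest-neighbor baseline: for each labeled source keypoint,
    pick the index of the most similar target vertex."""
    return [
        max(range(len(target_feats)),
            key=lambda j: cosine_similarity(kp, target_feats[j]))
        for kp in source_keypoint_feats
    ]


def knn_transfer_labels(source_feats, source_labels, target_feats, k=3):
    """KNN classification in feature space: each target vertex takes the
    majority part label among its k most similar source vertices."""
    predictions = []
    for t in target_feats:
        scored = sorted(
            ((cosine_similarity(t, s), label)
             for s, label in zip(source_feats, source_labels)),
            key=lambda pair: pair[0],
            reverse=True,
        )
        top_labels = [label for _, label in scored[:k]]
        predictions.append(Counter(top_labels).most_common(1)[0][0])
    return predictions


# Toy example with 2D features and two part labels.
source_feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
source_labels = ["wing", "wing", "tail", "tail"]
target_feats = [[1.0, 0.05], [0.05, 1.0]]

part_labels = knn_transfer_labels(source_feats, source_labels, target_feats)
keypoint_vertices = transfer_keypoints_nn([[0.0, 1.0]], target_feats)
```

Note that the plain nearest-neighbor transfer treats every keypoint independently, so nothing stops two keypoints (for example, on symmetric parts) from mapping to the same target vertex. That is the kind of collapse the optimization module is described as preventing.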

Thomas Wimmer is supported by the Konrad Zuse School of Excellence in Learning and Intelligent Systems (ELIZA) through the DAAD programme Konrad Zuse Schools of Excellence in Artificial Intelligence, sponsored by the German Federal Ministry of Education and Research. Parts of this work were supported by the ERC Starting Grant No. 758800 (EXPROTEA), the ANR AI Chair AIGRETTE, as well as gifts from Adobe and Ansys Inc.

@inproceedings{wimmer2023back,
  title={Back to 3D: Few-Shot 3D Keypoint Detection with Back-Projected 2D Features},
  author={Wimmer, Thomas and Wonka, Peter and Ovsjanikov, Maks},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2024}
}