State-of-the-art novel view synthesis methods achieve impressive results for multi-view captures

of static 3D scenes. However, the reconstructed scenes still lack “liveliness,” a key component

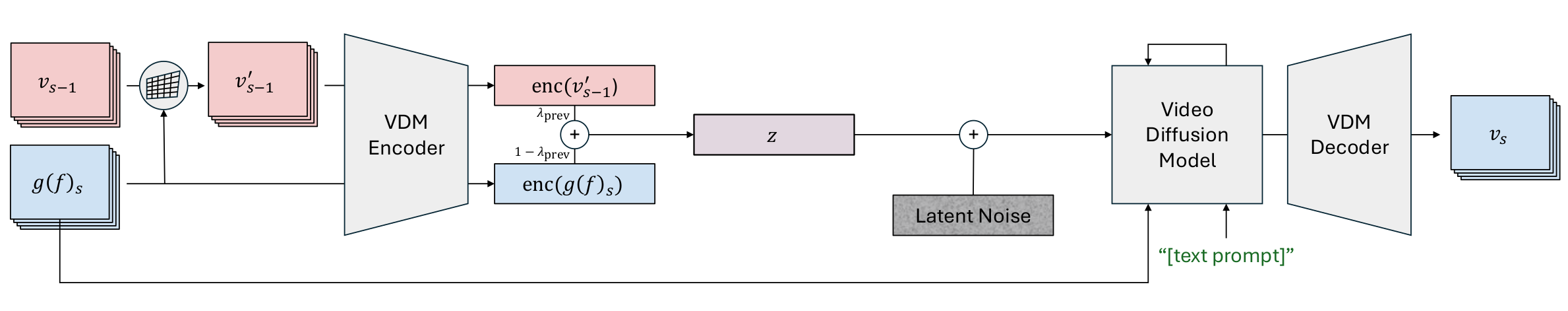

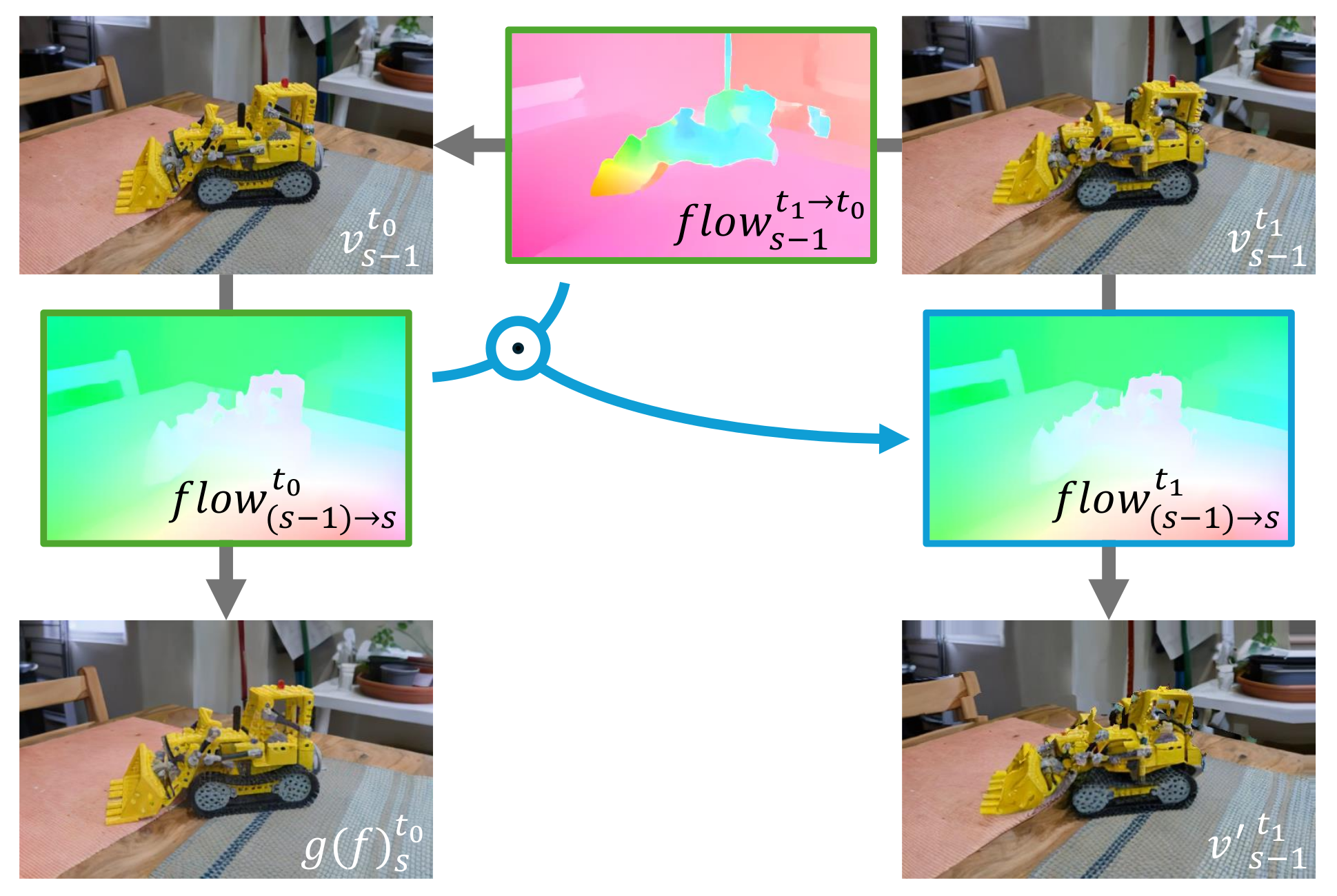

for creating engaging 3D experiences. Recently, novel video diffusion models generate realistic

videos with complex motion and enable animations of 2D images, however they cannot naively be

used to animate 3D scenes as they lack multi-view consistency. To breathe life into the static

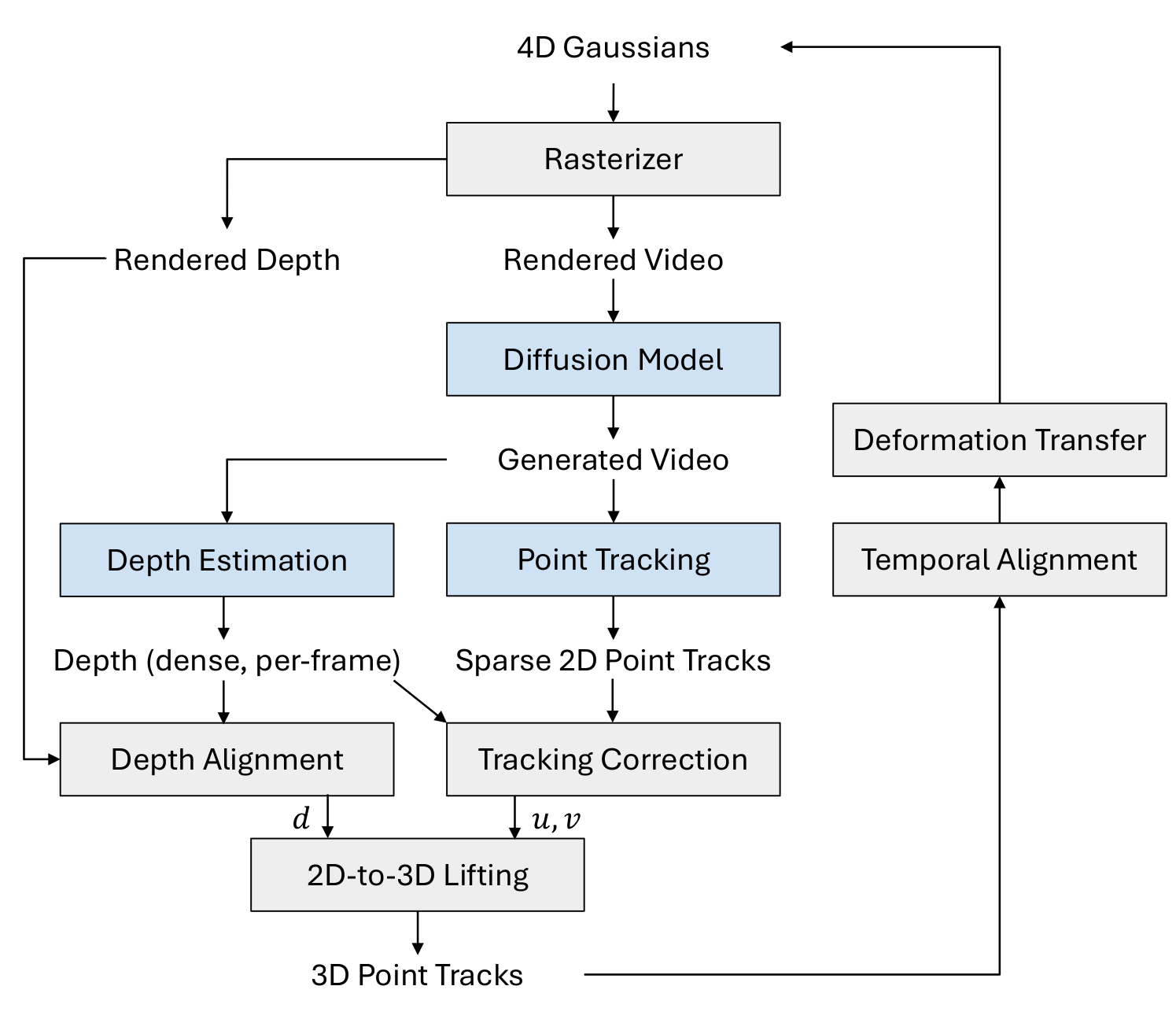

world, we propose Gaussians2Life, a method for animating parts of high-quality 3D

scenes in a Gaussian Splatting representation. Our key idea is to leverage powerful video

diffusion models as the generative component of our model and to combine these with a robust

technique to lift 2D videos into meaningful 3D motion. We find that, in contrast to prior work,

this enables realistic animations of complex, pre-existing 3D scenes and further enables the

animation of a large variety of object classes, while related work is mostly focused on

prior-based character animation, or single 3D objects. Our model enables the creation of

consistent, immersive 3D experiences for arbitrary scenes.

DreamGaussian4D

DreamGaussian4D

Ours

Ours

DreamGaussian4D

DreamGaussian4D

Ours

Ours